Distrust of science is nothing new. But it has grown with the use of social networks, and the health crisis has further exacerbated the situation. Medicine must respond by adapting within an uncompromising ethical framework.

Over the next 40 years, science is likely to produce more knowledge than humans have created in all their history, says American author Shawn Lawrence Otto in his book The War on Science. But the author also regrets that, these days, when the world needs scientists most, the idea of objective knowledge itself is under attack. The Science Barometer Switzerland reports that 21% of people surveyed believe that the number of deaths from the coronavirus is deliberately exaggerated by the authorities. Almost 16% believe that powerful people have planned the pandemic and 9% even doubt that there is any evidence to prove the existence of the novel virus. How are we supposed to interpret that distrust, and what can we do about it? Below is our explanation in six points.

The perception of science is strongly influenced by social media. Inaccurate or even misleading information goes viral online, fuelling the dissemination of conspiracy theories. And it doesn’t help that Facebook and Twitter algorithms steer users towards content they already adhere to. This reinforces prejudices and weakens the development of critical thinking. It also blurs the line between scientific fact and opinion, as shown in the recent successful docu-drama The Social Dilemma by Jeff Orlowski.

The designers of these digital tools, frightened by what they themselves have created, warn of the danger of these platforms, which encourage users to lose control of their beliefs and can even change their behaviour. Science is becoming a subject where anyone can state their own truth, thus endangering the shared truth and the consensus it is built on.

However, in Switzerland, opinion surveys have shown since the 1970s that the population’s trust in science remains quite good, and often much better than scientists imagine, says Bruno Strasser, science historian and professor at the University of Geneva. Researchers have a much more positive image than that of politicians, the media or heads of multinationals, for example. “Trust can be fragmented,” he says. “You can be opposed to one type of vaccine while in favour of chemotherapy. These nuances are sometimes perceived as a distrust of science as a whole, but it’s not the case at all.”

What has changed is that people now have spaces for dialogue about science and medicine, Bruno Strasser says. “For better or for worse. But these spaces have the merit of bringing out a form of critique.” There should be more room for doubt and uncertainty in scientific communication, which would strengthen the public's trust in science. “In the past, discoveries were often presented as being based on scientific consensus, while neglecting the uncertainty factor that is inherent in science, which is its strength.”

The Covid-19 crisis created a totally unprecedented situation: the public had the opportunity to follow the construction of scientific knowledge about the virus, as if they were watching a reality TV show. The public witnessed, in real time, all the questions surrounding the pandemic. By nature, this path forges through doubts, challenges and corrections, for example about wearing face masks or the effectiveness of certain drugs. “The general public has not necessarily understood that these contradictions are part of the scientific process, thinking that a research result is valid forever,” says Bruno Strasser. “We are now paying for the past mistakes of scientific communication.”

This crisis, marked by a loss of trust in scientists, who are often perceived as an elite disconnected from the concerns of the people, is not new. It goes back to the 1960s, after the two world wars. The use of poison gas and the atomic bomb gave rise to an image of science geared against the common good. After that, the uprisings and counter-culture movements of the late 1960s challenged all forms of authority. Making the situation worse were major technological and industrial accidents, such as Chernobyl. Scientific advances in genetic engineering and the development of pesticides have also contributed to these controversies because of the ethical issues they raise.

“The development of knowledge has always been accompanied by a critical eye, especially when science is expressed in techniques,” Bruno Strasser explains. “As early as the 18th century, there were revolts against machines. Post-World War II, between 1945 and 1975, critical voices spoke out about the negative consequences of scientific and technological advances on the environment and society.”

Science has proven itself to be trustworthy, says Philippe Huneman, director of research with the Institute of the History and Philosophy of Science and Techniques at the Sorbonne. “Acquiring knowledge is the best tool for understanding the real world and being able to influence it. It’s perfectly rational to rely primarily on this field to establish the beliefs that underlie our actions.”

The best way to produce science is to justify it with its procedures, methods and standards. “The procedures that guide science are reliable because they are founded on social organisation. That’s what Robert Merton, one of the fathers of the sociology of science in the 1950s, defined as organised scepticism. Research is not the affair of one individual and isolated scientific activity. Even great scientists such as James Watt and Albert Einstein worked within a scientific community structured within standardised procedures.”

This organised scepticism is upheld through conferences, debate institutions, and peer review, an examination by independent experts in the field, Philippe Huneman says. Doubt isn’t simply about saying “this is all nonsense”. “You must provide justified reasons to be taken seriously when you express a doubt. But it’s not always easy to distinguish between conspiracy theory and well-founded suspicion of dubious scientific practices.”

Another safeguard against scientific misconduct is that the slightest breach of ethics comes at a high cost. Any data fraud can ruin a career. “Therefore, it is legitimate to maintain a reasonable degree of trust in science as a producer of truth. Provided, of course, that the social organisation works properly.”

At present, this evaluation process works rather well, even though it could do with improvement in certain sectors, Philippe Huneman points out. “There’s always a risk of bias in the results, mainly due to funding. In some disciplines, the economic interests at stake are astronomical. This is the case in the medical sciences. For example, funding for research projects can come from the food industry, which is obviously not objective. In scientific nutrition journals, 55% of studies with industry involvement are favourable to the industry’s products, compared with only 9% when the research is conducted independently*.”

Biases can also influence research and its results. Philippe Huneman cites the example of malaria, one of the main causes of death in tropical countries. For a long time, this disease received very little attention and, more importantly, little funding compared to Western diseases.

Another problem is that scientific research is often based on an androcentric perspective, i.e. based exclusively on a male point of view, consciously or not, the expert says. “Conditions affecting white men, such as baldness, are studied excessively compared to diseases that exclusively affect women, such as endometriosis.” He also reminds us that, for a long time, scientific theories on the biology of reproduction were sexist and not very objective, as philosopher Elisabeth Lloyd has highlighted. She has shown that explanations of the female orgasm in evolutionary biology have been systematically linked to the idea that a woman’s sexual function is limited to reproduction.

An additional factor that can betray scientific objectivity is the pressure on researchers to publish, the famous “publish or perish” dilemma. “This ultra-competitive system encourages behaviour that opposes the scientific approach,” Philippe Huneman laments, referring to the development of so-called predatory journals, which publish anything and everything to collect large publication fees.

In his book Malscience : de la fraude dans les labos on research misconduct, Nicolas Chevassus-au-Louis describes a fiercely competitive scientific community where truth is moulded into what scientists are trying to demonstrate. “Scientific misconduct can involve data fabrication, falsification or even plagiarism,” the trained biologist explains, pointing out that 2% of researchers admitted, in anonymous interviews, to having invented or falsified data at least once in their career. This would come to a substantial 140,000 scientists worldwide. “In fact, the number of retractions in the scientific literature, largely related to errors in the results, has increased tenfold since the 1980s.”

Of the natural sciences, misconduct is particularly common in biology and medicine. This is due to the often small size of research teams and laboratories, as well as the huge amounts of money at stake. “These fields are among the weakest from an epistemological point of view,” Nicolas Chevassus-au-Louis says. “It’s therefore more difficult to reproduce an experiment, and it can be more tempting to alter the results.”

The investigator reports that currently one in five studies is embellished and stresses the challenges of drawing a clear line where tweaking certain results ends and fraud begins. He also notes that next to no failed studies are published. “Nowadays, 90% of researchers find positive results. As they must continually justify their funding, they obviously have an interest in finding what they were looking for.”

Nicolas Chevassus-au-Louis argues that this explosion of scientific misconduct is driven by more intense international scientific competition, particularly with the arrival of Chinese and Indian researchers on the market, the ever-present scarcity of funding for laboratories, and tighter deadlines for projects. Journals that evaluate their researchers based on impact factor – the number of times an article will be cited after publication – are also responsible for current excesses.

During the pandemic, given the urgency of finding solutions and explanations for the virus, a frenzy of pre-publications emerged – work that had not even been peer-reviewed – with lower quality control from the scientific community. Research should be better protected against these breaches, Nicolas Chevassus-au-Louis insists. “Science remains the best method for achieving verifiable knowledge about the world. There is no reason to doubt science per se. But it must be based on valid, rational criteria.”

To avoid this type of misconduct, scientific research needs to slow down. For example, solutions could be implemented to change the rules on funding allocation and researcher evaluation, which is currently an essentially quantitative metric based on the number of papers published. This approach can lead to fraud, driving researchers to publish more and faster. “As for funding and promotions, researchers could be asked to submit their three most significant publications. The evaluator would take the time to read them for a more qualitative evaluation.” Nicolas Chevassus-au-Louis also advocates improving the education of young researchers by going back to promoting the value of rigour and scientific integrity.

* Sacks G, Riesenberg D, Mialon M, Dean S, Cameron AJ. The characteristics and extent of food industry involvement in peer-reviewed research articles from 10 leading nutrition-related journals in 2018. PLoS One. 2020;15(12):e0243144. Published 2020 Dec 16. doi:10.1371/journal.pone.0243144

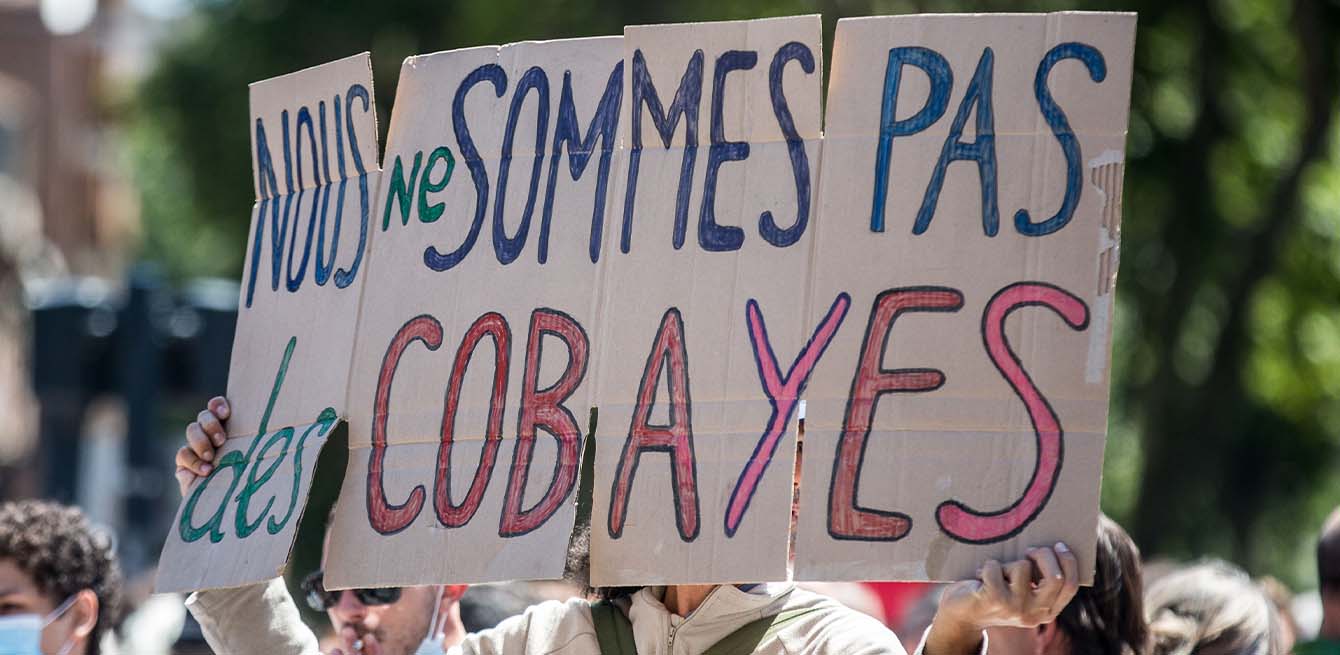

The introduction of a health pass and mandatory vaccination has sparked several demonstrations. Beyond their distrust of the vaccine, some people disagree with the way politicians have handled the pandemic.

Percentage of people who claim to have a strong interest in science and research.

Percentage of people who believe that scientific controversy helps to advance research.

Percentage of the population that wants political decisions about the pandemic to be informed by scientific knowledge.

The importance of science communication and dialogue between the public and the scientific community has been illustrated repeatedly in recent years, particularly during the pandemic. The Swiss Academies of Sciences asked the expert group “Communicating Sciences and Arts in Times of Digital Media” to identify ways to improve communication. Here are some of their recommendations:

Reinforce professional, social, psychological and legal support for scientists involved in public communication as well as for whistleblowers, to prevent personal attacks, especially against experts;

Encourage science communication and dialogue with underserved audiences;

Support participatory research initiatives; reflect scientific diversity, in terms of research areas, but also in terms of researchers’ experience, age, gender and ethnic origin;

Create new infrastructure to support science journalism, including a range of funding sources to ensure the independence of these media.

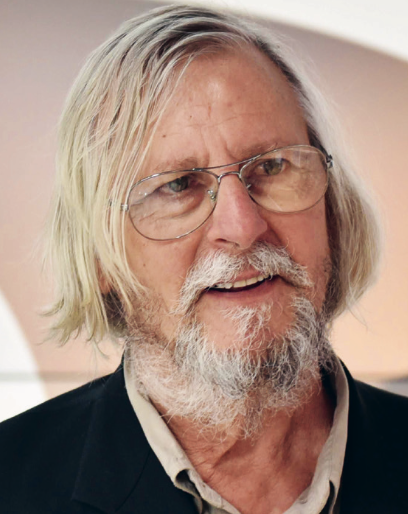

French microbiologist Didier Raoult faced disciplinary action in December from the National Council of the Order of Physicians for giving false information about hydroxychloroquine. The director of the University Hospital Institute for Infectious Diseases in Marseille promoted a treatment whose effects on Covid-19 had not been proven.

Many doctors expressed their views in the media during the pandemic. In their televised performances (particularly concerning the supposed effects of hydroxychloroquine), professionals from the same discipline sometimes contradicted each other, to the surprise of viewers. It left people with the impression that medicine was in a state of total confusion.

Demands began flying in for doctors to be more specific: when speaking, they should distinguish between their personal opinion and the consensus in the field at hand.

Furthermore, “we must obviously use the language of hypothesis, and remember that the truths we express today are not yet fully supported by science,” stated Jean-Marcel Mourgues, vice-president of the National Council of the Order of Physicians, in the magazine Marianne in July. This statement was made following 10 complaints filed by the organisation against doctors for their public statements about Covid-19. Medical ethics stipulate a precautionary principle: in any media appearance, doctors should temper their remarks about a disease for which science has not yet been able to establish solid knowledge. Given the magnitude of the Covid-19 pandemic, doctors play a key role. Distinguishing personal opinion from consensus has become more important than ever.